Abstract

2401.09621.pdf (arxiv.org)

arXiv:2401.09621v1 [cs.DB] 17 Jan 2024

Contemporary approaches to data management are increasingly

relying on unified analytics and AI platforms to foster collabora

tion, interoperability, seamless access to reliable data, and high

performance. Data Lakes featuring open standard table formats

such as Delta Lake, Apache Hudi, and Apache Iceberg are central

components of these data architectures. Choosing the right format

for managing a table is crucial for achieving the objectives men

tioned above. The challenge lies in selecting the best format, a task

that is onerous and can yield temporary results, as the ideal choice

may shift over time with data growth, evolving workloads, and the

competitive development of table formats and processing engines.

Moreover, restricting data access to a single format can hinder data

sharing resulting in diminished business value over the long term.

The ability to seamlessly interoperate between formats and with

negligible overhead can effectively address these challenges. Our

solution in this direction is an innovative omni-directional transla

tor, XTable, that facilitates writing data in one format and reading

it in any format, thus achieving the desired format interoperability.

In this work, we demonstrate the effectiveness of XTable through

application scenarios inspired by real-world use cases

Autor: Andreas Schneider

How to Detect Locking and Blocking In Your Analysis Services Environment

How to Detect Locking and Blocking In Your Analysis Services Environment – byoBI.com (wordpress.com)

3 Methods for Shredding Analysis Services Extended Events – byoBI.com (wordpress.com)

Introduction To Analysis Services Extended Events – Mark Vaillancourt (markvsql.com)

<!-- This script supplied by Bill Anton http://byobi.com/blog/2013/06/extended-events-for-analysis-services/ -->

<Create

xmlns="http://schemas.microsoft.com/analysisservices/2003/engine"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xmlns:ddl2="http://schemas.microsoft.com/analysisservices/2003/engine/2"

xmlns:ddl2_2="http://schemas.microsoft.com/analysisservices/2003/engine/2/2"

xmlns:ddl100_100="http://schemas.microsoft.com/analysisservices/2008/engine/100/100"

xmlns:ddl200_200="http://schemas.microsoft.com/analysisservices/2010/engine/200/200"

xmlns:ddl300_300="http://schemas.microsoft.com/analysisservices/2011/engine/300/300">

<ObjectDefinition>

<Trace>

<ID>MyTrace</ID>

<!--Example: <ID>QueryTuning_20130624</ID>-->

<Name>MyTrace</Name>

<!--Example: <Name>QueryTuning_20130624</Name>-->

<ddl300_300:XEvent>

<event_session name="xeas"

dispatchLatency="1"

maxEventSize="4"

maxMemory="4"

memoryPartitionMode="none"

eventRetentionMode="allowSingleEventLoss"

trackCausality="true">

<!-- ### COMMAND EVENTS ### -->

<!--<event package="AS" name="CommandBegin" />-->

<!--<event package="AS" name="CommandEnd" />-->

<!-- ### DISCOVER EVENTS ### -->

<!--<event package="AS" name="DiscoverBegin" />-->

<!--<event package="AS" name="DiscoverEnd" />-->

<!-- ### DISCOVER SERVER STATE EVENTS ### -->

<!--<event package="AS" name="ServerStateDiscoverBegin" />-->

<!--<event package="AS" name="ServerStateDiscoverEnd" />-->

<!-- ### ERRORS AND WARNING ### -->

<!--<event package="AS" name="Error" />-->

<!-- ### FILE LOAD AND SAVE ### -->

<!--<event package="AS" name="FileLoadBegin" />-->

<!--<event package="AS" name="FileLoadEnd" />-->

<!--<event package="AS" name="FileSaveBegin" />-->

<!--<event package="AS" name="FileSaveEnd" />-->

<!--<event package="AS" name="PageInBegin" />-->

<!--<event package="AS" name="PageInEnd" />-->

<!--<event package="AS" name="PageOutBegin" />-->

<!--<event package="AS" name="PageOutEnd" />-->

<!-- ### LOCKS ### -->

<!--<event package="AS" name="Deadlock" />-->

<!--<event package="AS" name="LockAcquired" />-->

<!--<event package="AS" name="LockReleased" />-->

<!--<event package="AS" name="LockTimeout" />-->

<!--<event package="AS" name="LockWaiting" />-->

<!-- ### NOTIFICATION EVENTS ### -->

<!--<event package="AS" name="Notification" />-->

<!--<event package="AS" name="UserDefined" />-->

<!-- ### PROGRESS REPORTS ### -->

<!--<event package="AS" name="ProgressReportBegin" />-->

<!--<event package="AS" name="ProgressReportCurrent" />-->

<!--<event package="AS" name="ProgressReportEnd" />-->

<!--<event package="AS" name="ProgressReportError" />-->

<!-- ### QUERY EVENTS ### -->

<!--<event package="AS" name="QueryBegin" />-->

<event package="AS" name="QueryEnd" />

<!-- ### QUERY PROCESSING ### -->

<!--<event package="AS" name="CalculateNonEmptyBegin" />-->

<!--<event package="AS" name="CalculateNonEmptyCurrent" />-->

<!--<event package="AS" name="CalculateNonEmptyEnd" />-->

<!--<event package="AS" name="CalculationEvaluation" />-->

<!--<event package="AS" name="CalculationEvaluationDetailedInformation" />-->

<!--<event package="AS" name="DaxQueryPlan" />-->

<!--<event package="AS" name="DirectQueryBegin" />-->

<!--<event package="AS" name="DirectQueryEnd" />-->

<!--<event package="AS" name="ExecuteMDXScriptBegin" />-->

<!--<event package="AS" name="ExecuteMDXScriptCurrent" />-->

<!--<event package="AS" name="ExecuteMDXScriptEnd" />-->

<!--<event package="AS" name="GetDataFromAggregation" />-->

<!--<event package="AS" name="GetDataFromCache" />-->

<!--<event package="AS" name="QueryCubeBegin" />-->

<!--<event package="AS" name="QueryCubeEnd" />-->

<!--<event package="AS" name="QueryDimension" />-->

<!--<event package="AS" name="QuerySubcube" />-->

<!--<event package="AS" name="ResourceUsage" />-->

<!--<event package="AS" name="QuerySubcubeVerbose" />-->

<!--<event package="AS" name="SerializeResultsBegin" />-->

<!--<event package="AS" name="SerializeResultsCurrent" />-->

<!--<event package="AS" name="SerializeResultsEnd" />-->

<!--<event package="AS" name="VertiPaqSEQueryBegin" />-->

<!--<event package="AS" name="VertiPaqSEQueryCacheMatch" />-->

<!--<event package="AS" name="VertiPaqSEQueryEnd" />-->

<!-- ### SECURITY AUDIT ### -->

<!--<event package="AS" name="AuditAdminOperationsEvent" />-->

<event package="AS" name="AuditLogin" />

<!--<event package="AS" name="AuditLogout" />-->

<!--<event package="AS" name="AuditObjectPermissionEvent" />-->

<!--<event package="AS" name="AuditServerStartsAndStops" />-->

<!-- ### SESSION EVENTS ### -->

<!--<event package="AS" name="ExistingConnection" />-->

<!--<event package="AS" name="ExistingSession" />-->

<!--<event package="AS" name="SessionInitialize" />-->

<target package="Package0" name="event_file">

<!-- Make sure SSAS instance Service Account can write to this location -->

<parameter name="filename" value="C:\SSASExtendedEvents\MyTrace.xel" />

<!--Example: <parameter name="filename" value="C:\Program Files\Microsoft SQL Server\MSAS11.SSAS_MD\OLAP\Log\trace_results.xel" />-->

</target>

</event_session>

</ddl300_300:XEvent>

</Trace>

</ObjectDefinition>

</Create>

/****

Base query provided by Francesco De Chirico

http://francescodechirico.wordpress.com/2012/08/03/identify-storage-engine-and-formula-engine-bottlenecks-with-new-ssas-xevents-5/

****/

SELECT

xe.TraceFileName

, xe.TraceEvent

, xe.EventDataXML.value('(/event/data[@name="EventSubclass"]/value)[1]','int') AS EventSubclass

, xe.EventDataXML.value('(/event/data[@name="ServerName"]/value)[1]','varchar(50)') AS ServerName

, xe.EventDataXML.value('(/event/data[@name="DatabaseName"]/value)[1]','varchar(50)') AS DatabaseName

, xe.EventDataXML.value('(/event/data[@name="NTUserName"]/value)[1]','varchar(50)') AS NTUserName

, xe.EventDataXML.value('(/event/data[@name="ConnectionID"]/value)[1]','int') AS ConnectionID

, xe.EventDataXML.value('(/event/data[@name="StartTime"]/value)[1]','datetime') AS StartTime

, xe.EventDataXML.value('(/event/data[@name="EndTime"]/value)[1]','datetime') AS EndTime

, xe.EventDataXML.value('(/event/data[@name="Duration"]/value)[1]','bigint') AS Duration

, xe.EventDataXML.value('(/event/data[@name="TextData"]/value)[1]','varchar(max)') AS TextData

FROM

(

SELECT

[FILE_NAME] AS TraceFileName

, OBJECT_NAME AS TraceEvent

, CONVERT(XML,Event_data) AS EventDataXML

FROM sys.fn_xe_file_target_read_file ( 'C:\SSASExtendedEvents\MyTrace*.xel', null, null, null )

) xe

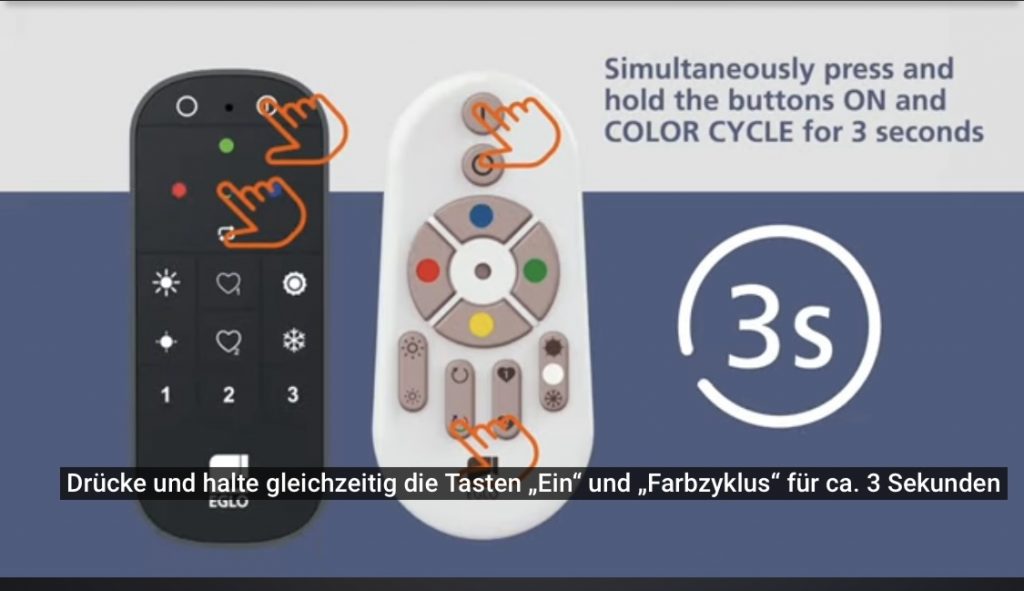

Reset Remote Control 99099 – RCU

DBT (Data Build Tool)

DBT (Data Build Tool) ist ein Kommandozeilen-Tool, das Software-Entwicklern und Datenanalysten hilft, ihre Datenverarbeitungs-Workflows effizienter zu gestalten. Es wird verwendet, um Daten-Transformationen zu definieren, zu testen und zu orchestrieren, die in modernen Data-Warehouses ausgeführt werden. Hier sind einige Schlüsselaspekte, die erklären, welchen Zweck DBT im Daten-Umfeld hat:

- Transformationen als Code: DBT ermöglicht es Ihnen, Daten-Transformationen als Code zu schreiben, zu versionieren und zu verwalten. Dies fördert die Best Practices der Softwareentwicklung wie Code-Reviews, Versionskontrolle und Continuous Integration/Continuous Deployment (CI/CD) im Kontext der Datenverarbeitung.

- Modularität und Wiederverwendbarkeit: Mit DBT können Sie Transformationen in modularer Form erstellen, sodass sie leicht wiederverwendbar und wartbar sind. Dies verbessert die Konsistenz und Effizienz der Daten-Transformationen.

- Automatisierung: DBT automatisiert den Workflow von der Rohdatenverarbeitung bis zur Erstellung von Berichtsdaten. Es führt Transformationen in einer bestimmten Reihenfolge aus, basierend auf den Abhängigkeiten zwischen den verschiedenen Datenmodellen.

- Tests und Datenqualität: DBT unterstützt das Testen von Daten, um sicherzustellen, dass sie korrekt transformiert werden. Sie können Tests für Datenmodelle definieren, um Datenintegrität und -qualität zu gewährleisten.

- Dokumentation: DBT generiert automatisch eine Dokumentation der Datenmodelle, was für die Transparenz und das Verständnis der Datenverarbeitungsprozesse innerhalb eines Teams oder Unternehmens wichtig ist.

- Performance-Optimierung: Durch die effiziente Nutzung der Ressourcen moderner Data-Warehouses ermöglicht DBT eine schnelle Verarbeitung großer Datenmengen, was zur Leistungsoptimierung beiträgt.

- Integration mit Data-Warehouses: DBT ist kompatibel mit einer Vielzahl von modernen Data-Warehouses wie Snowflake, BigQuery, Redshift und anderen, was die Integration in bestehende Dateninfrastrukturen erleichtert.

Kurz gesagt, DBT hilft dabei, den Prozess der Daten-Transformation zu vereinfachen, zu automatisieren und zu verbessern, was zu effizienteren und zuverlässigeren Datenverarbeitungs-Workflows führt.

DBT kann sowohl in Cloud-Umgebungen als auch on-premises verwendet werden. Es ist nicht auf Cloud-Lösungen beschränkt. Die Kernfunktionalität von DBT, die auf der Kommandozeilen-Interface basiert, kann auf jedem System installiert und ausgeführt werden, das Python unterstützt. Somit ist DBT flexibel einsetzbar, unabhängig davon, ob Ihre Dateninfrastruktur in der Cloud oder in einem lokalen Rechenzentrum (on-premises) gehostet wird.

Wenn Sie DBT on-premises nutzen möchten, müssen Sie sicherstellen, dass Ihr Datenlager oder Ihre Datenbank on-premises unterstützt wird. DBT unterstützt eine Vielzahl von Datenbanken und Data-Warehouses, einschließlich solcher, die üblicherweise on-premises eingesetzt werden.

Es ist wichtig zu beachten, dass, während DBT selbst on-premises laufen kann, bestimmte Zusatzfunktionen oder -produkte, wie dbt Cloud, eine SaaS-Lösung darstellen, die spezifische Cloud-basierte Vorteile bietet, wie eine integrierte Entwicklungsumgebung und erweiterte Orchestrierungs- und Monitoring-Tools. Die Entscheidung, ob Sie DBT on-premises oder in der Cloud nutzen, hängt letztendlich von Ihrer spezifischen Dateninfrastruktur und Ihren geschäftlichen Anforderungen ab.

DBT (Data Build Tool) ist in seiner Kernversion ein Open-Source-Tool. Entwickelt von der Firma Fishtown Analytics (jetzt dbt Labs), ermöglicht es Analysten und Entwicklern, Transformationen in ihrem Data Warehouse durchzuführen, indem SQL-Code verwendet wird, der in einer Versionierungsumgebung verwaltet wird. Dies fördert die Anwendung von Softwareentwicklungspraktiken wie Code-Reviews und Versionskontrolle im Bereich der Datenanalyse.

Die Open-Source-Version von DBT kann kostenlos genutzt werden und bietet die grundlegenden Funktionen, die notwendig sind, um Daten-Transformationen zu definieren, zu testen und auszuführen. Es gibt auch eine kommerzielle Version, dbt Cloud, die zusätzliche Features bietet, wie eine Web-basierte IDE, erweiterte Scheduling-Optionen und bessere Team-Kollaborationswerkzeuge.

Das Open-Source-Projekt ist auf GitHub verfügbar, wo Benutzer den Code einsehen, eigene Beiträge leisten und die Entwicklung der Software verfolgen können. Dies fördert Transparenz und Gemeinschaftsbeteiligung, zwei Schlüsselaspekte der Open-Source-Philosophie.

Comparison of the Open Source Query Engines: Trino and StarRocks

StarRocks is a Native Vectorized Engine implemented in C++, while Trino is implemented in Java and uses limited vectorization technology. Vectorization technology helps StarRocks utilize CPU processing power more efficiently. This type of query engine has the following characteristics:

- It can fully utilize the efficiency of columnar data management. This type of query engine reads data from columnar storage, and the way they manage data in memory, as well as the way operators process data, is columnar. Such engines can use the CPU cache more effectively, improving CPU execution efficiency.

- It can fully utilize the SIMD instructions supported by the CPU. This allows the CPU to complete more data calculations in fewer clock cycles. According to data provided by StarRocks, using vectorized instructions can improve overall performance by 3-10 times.

- It can compress data more efficiently to greatly reduce memory usage. This makes this type of query engine more capable of handling large data volume query requests.

In fact, Trino is also exploring vectorization technology. Trino has some SIMD code, but it’s behind compared to StarRocks in terms of depth and coverage. Trino is still working on improving their vectorization efforts (read https://github.com/trinodb/trino/issues/14237). Meta’s Velox project aims to use vectorization technology to accelerate Trino queries. However, so far, very few companies have formally used Velox in production environments.

Comparison of the Open Source Query Engines: Trino and StarRocks

Comparison of the Open Source Query Engines: Trino and StarRocks

The difference between trino and dremio

Apache Trino (formerly known as PrestoSQL) and Dremio are both distributed query engines, but they are designed with different architectures and use cases in mind. Here’s a comparison of the two:

Apache Trino

- Query Engine: Trino is a distributed SQL query engine designed for interactive analytic queries against various data sources of all sizes, from gigabytes to petabytes. It’s particularly optimized for OLAP (Online Analytical Processing) queries.

- Data Federation: Trino allows querying data where it lives, without the need to move or copy the data. It can query multiple sources simultaneously and supports a wide variety of data sources like HDFS, S3, relational databases, NoSQL databases, and more.

- Performance: Trino is designed for fast query execution and is capable of providing results in seconds. It achieves high performance through in-memory processing and distributed query execution.

- Use Cases: Trino is primarily used for interactive analytics, where users execute complex queries and expect quick results. It’s suitable for data analysts and scientists who need to perform ad-hoc analysis across different data sources.

- Statelessness: Trino’s architecture is stateless, which means it doesn’t store any data itself. It processes queries and retrieves data directly from the source.

Dremio

- Data-as-a-Service Platform: Dremio is not just a query engine; it’s a data-as-a-service platform that provides tools for data exploration, curation, and acceleration. It offers a more integrated solution compared to Trino’s specialized query engine.

- Data Reflections: One of Dremio’s key features is its use of data reflections, which are optimized representations of data that can significantly speed up query performance. These reflections allow Dremio to provide faster responses to queries by avoiding full scans of the underlying data.

- Data Catalog: Dremio includes a data catalog that helps users discover and curate data. It provides a unified view of all data sources, making it easier for users to find and access the data they need.

- Data Lineage: It offers data lineage features, providing visibility into how data is transformed and used across the platform, which is beneficial for governance and compliance.

- Use Cases: Dremio is suited for organizations looking for a comprehensive data platform that can handle data exploration, curation, and query acceleration. It’s beneficial for scenarios where performance optimization and data management are critical.

Summary

- Trino is a high-performance, distributed SQL query engine designed for fast, ad-hoc analytics across various data sources. It’s focused on query execution and is best suited for environments where the primary requirement is to run interactive, complex queries over large datasets.

- Dremio offers a broader set of features beyond just query execution, including data curation, cataloging, and acceleration. It’s designed as a data-as-a-service platform that can help organizations manage and optimize their data for various analytics and BI use cases.

Choosing between Trino and Dremio depends on the specific needs of the organization. If the primary need is fast, ad-hoc query execution across diverse data sources, Trino might be the better choice. If there’s a requirement for a comprehensive data platform with features like data curation, cataloging, and acceleration, Dremio could be more suitable.

The difference between apache flink and apache trino

Apache Flink and Apache Trino (formerly known as PrestoSQL) are both distributed processing systems, but they are designed for different types of workloads and use cases in the big data ecosystem. Here’s a breakdown of their primary differences:

Apache Flink

- Stream Processing: Flink is primarily known for its stream processing capabilities. It can process unbounded streams of data in real-time with high throughput and low latency. Flink provides stateful stream processing, allowing for complex operations like windowing, joins, and aggregations on streams.

- Batch Processing: While Flink is stream-first, it also supports batch processing. Its DataSet API (now part of the unified Batch/Stream API) allows for batch jobs, treating them as a special case of stream processing.

- State Management: Flink has advanced state management capabilities, which are crucial for many streaming applications. It can handle large states efficiently and offers features like state snapshots and fault tolerance.

- APIs and Libraries: Flink offers a variety of APIs (DataStream API, Table API, SQL API) and libraries (CEP for complex event processing, Gelly for graph processing, etc.) for developing complex data processing applications.

- Use Cases: Flink is ideal for real-time analytics, monitoring, and event-driven applications. It’s used in scenarios where low latency and high throughput are critical, and where the application needs to react to data in real-time.

Apache Trino

- SQL Query Engine: Trino is a distributed SQL query engine designed for interactive analytic queries against data sources of all sizes ranging from gigabytes to petabytes. It’s not a database but rather a way to query data across various data sources.

- OLAP Workloads: Trino is optimized for OLAP (Online Analytical Processing) queries and is capable of handling complex analytical queries against large datasets. It’s designed to perform ad-hoc analysis at scale.

- Federation: One of the key features of Trino is its ability to query data from multiple sources seamlessly. This means you can execute queries that join or aggregate data across different databases and storage systems.

- Speed: Trino is designed for fast query execution and can provide results in seconds. It achieves this through techniques like in-memory processing, optimized execution plans, and distributed query execution.

- Use Cases: Trino is used for interactive analytics, where users need to run complex queries and get results quickly. It’s often used for data exploration, business intelligence, and reporting.

Summary

- Flink is best suited for real-time streaming data processing and applications where timely response and state management are crucial.

- Trino excels in fast, ad-hoc analysis over large datasets, particularly when the data is spread across different sources.

Choosing between Flink and Trino depends on the specific requirements of the workload, such as the need for real-time processing, the complexity of the queries, the size of the data, and the latency requirements.

Tools for Thought

Tools for Thought ist eine Übung in retrospektivem Futurismus, d. h. ich habe es Anfang der 1980er Jahre geschrieben, um zu sehen, wie die Mitte der 1990er Jahre aussehen würde. Meine Odyssee begann, als ich Xerox PARC und Doug Engelbart entdeckte und feststellte, dass all die Journalisten, die sich über das Silicon Valley hermachten, die wahre Geschichte verpassten. Ja, die Geschichten über Teenager, die in ihren Garagen neue Industrien erfanden, waren gut. Aber die Idee des Personal Computers ist nicht dem Geist von Steve Jobs entsprungen. Die Idee, dass Menschen Computer zur Erweiterung ihres Denkens und ihrer Kommunikation, als Werkzeuge für intellektuelle Arbeit und soziale Aktivitäten nutzen können, war keine Erfindung der Mainstream-Computerindustrie, der orthodoxen Computerwissenschaft oder gar der Computerbastler. Ohne Leute wie J.C.R. Licklider, Doug Engelbart, Bob Taylor und Alan Kay hätte es das nicht gegeben. Aber ihre Arbeit wurzelte in älteren, ebenso exzentrischen, ebenso visionären Arbeiten, und so habe ich mich damit beschäftigt, wie Boole und Babbage und Turing und von Neumann – vor allem von Neumann – die Grundlagen schufen, auf denen die späteren Erbauer von Werkzeugen aufbauten, um die Zukunft zu schaffen, in der wir heute leben. Man kann nicht verstehen, wohin sich die bewusstseinsverstärkende Technologie entwickelt, wenn man nicht weiß, woher sie kommt.

howard rheingold’s | tools for thought

Tools for Thought is an exercise in retrospective futurism; that is, I wrote it in the early 1980s, attempting to look at what the mid 1990s would be like. My odyssey started when I discovered Xerox PARC and Doug Engelbart and realized that all the journalists who had descended upon Silicon Valley were missing the real story. Yes, the tales of teenagers inventing new industries in their garages were good stories. But the idea of the personal computer did not spring full-blown from the mind of Steve Jobs. Indeed, the idea that people could use computers to amplify thought and communication, as tools for intellectual work and social activity, was not an invention of the mainstream computer industry nor orthodox computer science, nor even homebrew computerists. If it wasn’t for people like J.C.R. Licklider, Doug Engelbart, Bob Taylor, Alan Kay, it wouldn’t have happened. But their work was rooted in older, equally eccentric, equally visionary, work, so I went back to piece together how Boole and Babbage and Turing and von Neumann — especially von Neumann — created the foundations that the later toolbuilders stood upon to create the future we live in today. You can’t understand where mind-amplifying technology is going unless you understand where it came from.

howard rheingold’s | tools for thought

Staff Engineer

At most technology companies, you’ll reach Senior Software Engineer, the career level, in five to eight years. At that point your path branches, and you have the opportunity to pursue engineering management or continue down the path of technical excellence to become a Staff Engineer.

Over the past few years we’ve seen a flurry of books unlocking the engineering manager career path, like Camille Fournier’s The Manager’s Path, Julie Zhuo’s The Making of a Manager and my own An Elegant Puzzle. The management career isn’t an easy one, but increasingly there is a map available

Stories of reaching Staff-plus engineering roles – StaffEng | StaffEng

News about SQL DATA LENS

SQL Data Lens is a powerful and optimized tool specifically designed for managing and interacting with databases on the InterSystems IRIS and Caché platforms. Here are the detailed aspects of SQL Data Lens:

- Optimization for InterSystems Platforms:

SQL Data Lens is highly optimized for the unique features of InterSystems IRIS and InterSystems Caché databases, making it an ideal choice for developers, administrators, and data analysts working with these platforms - Native Interoperability:

The tool showcases native interoperability by allowing seamless connections to the InterSystems Caché & InterSystems IRIS databases, among others. It facilitates organizing these connections into groups and sub-groups as per business requirements【26†(sqldatalens.com)】. - Intelligent SQL Editor:

It features an intelligent SQL editor that supports complex SQL query writing and editing. The editor provides real-time visual cues like table columns, primary, and foreign keys as users type, aiding in the construction of complex scripts for dynamic execution in varying database contexts - Cross Database Queries:

With its Local Query Cloud feature, SQL Data Lens supports cross-database queries across multiple servers and namespaces. It even allows data combination from other sources like Microsoft SQL Server, Microsoft Access, or simple CSV files without requiring any server-side installation - Database Visualization:

Users can visualize the database structure using database diagrams that graphically represent tables, columns, keys, and relationships within the database, aiding in better understanding and management of the data structure - Performance Enhancement:

SQL Data Lens is built from the ground up focusing on optimizing performance for InterSystems Caché and InterSystems IRIS databases. It aims to provide seamless, lightning-fast data exploration, significantly enhancing the data analysis process. - Ease of Use:

The tool is described as easy to use with a straightforward connection process to the databases. It includes drivers for InterSystems IRIS and Caché databases in many different versions, facilitating simple connections to the databases for various versions - Streamlined Data Management:

SQL Data Lens aims to streamline data management tasks by seamlessly querying, managing, and transforming data in one powerful tool, making data management tasks more efficient and effective - Software Updates and Licensing:

It appears that SQL Data Lens has had updates to its licensing system along with the addition of new drivers for InterSystems IRIS in recent versions, indicating active development and support for the tool

SQL Data Lens is more than a generic database tool; it is specialized for the needs of InterSystems IRIS and Caché database management, offering a range of features to improve database interaction, analysis, and management for its users.