Abstract

2401.09621.pdf (arxiv.org)

arXiv:2401.09621v1 [cs.DB] 17 Jan 2024

Contemporary approaches to data management are increasingly relying on unified analytics and AI platforms to foster collaboration, interoperability, seamless access to reliable data, and high performance. Data Lakes featuring open standard table formats such as Delta Lake, Apache Hudi, and Apache Iceberg are central components of these data architectures. Choosing the right format for managing a table is crucial for achieving the objectives mentioned above. The challenge lies in selecting the best format, a task that is onerous and can yield temporary results, as the ideal choice may shift over time with data growth, evolving workloads, and the competitive development of table formats and processing engines. Moreover, restricting data access to a single format can hinder data sharing, resulting in diminished business value over the long term. The ability to seamlessly interoperate between formats and with negligible overhead can effectively address these challenges. Our solution in this direction is an innovative omni-directional translator, XTable, that facilitates writing data in one format and reading it in any format, thus achieving the desired format interoperability. In this work, we demonstrate the effectiveness of XTable through application scenarios inspired by real-world use cases.

Category: Everything else

How to Detect Locking and Blocking In Your Analysis Services Environment

How to Detect Locking and Blocking In Your Analysis Services Environment – byoBI.com (wordpress.com)

3 Methods for Shredding Analysis Services Extended Events – byoBI.com (wordpress.com)

Introduction To Analysis Services Extended Events – Mark Vaillancourt (markvsql.com)

```xml
<!-- This script supplied by Bill Anton http://byobi.com/blog/2013/06/extended-events-for-analysis-services/ -->
<Create
    xmlns="http://schemas.microsoft.com/analysisservices/2003/engine"
    xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xmlns:ddl2="http://schemas.microsoft.com/analysisservices/2003/engine/2"
    xmlns:ddl2_2="http://schemas.microsoft.com/analysisservices/2003/engine/2/2"
    xmlns:ddl100_100="http://schemas.microsoft.com/analysisservices/2008/engine/100/100"
    xmlns:ddl200_200="http://schemas.microsoft.com/analysisservices/2010/engine/200/200"
    xmlns:ddl300_300="http://schemas.microsoft.com/analysisservices/2011/engine/300/300">
  <ObjectDefinition>
    <Trace>
      <ID>MyTrace</ID>
      <!--Example: <ID>QueryTuning_20130624</ID>-->
      <Name>MyTrace</Name>
      <!--Example: <Name>QueryTuning_20130624</Name>-->
      <ddl300_300:XEvent>
        <event_session name="xeas"
                       dispatchLatency="1"
                       maxEventSize="4"
                       maxMemory="4"
                       memoryPartitionMode="none"
                       eventRetentionMode="allowSingleEventLoss"
                       trackCausality="true">
          <!-- ### COMMAND EVENTS ### -->
          <!--<event package="AS" name="CommandBegin" />-->
          <!--<event package="AS" name="CommandEnd" />-->
          <!-- ### DISCOVER EVENTS ### -->
          <!--<event package="AS" name="DiscoverBegin" />-->
          <!--<event package="AS" name="DiscoverEnd" />-->
          <!-- ### DISCOVER SERVER STATE EVENTS ### -->
          <!--<event package="AS" name="ServerStateDiscoverBegin" />-->
          <!--<event package="AS" name="ServerStateDiscoverEnd" />-->
          <!-- ### ERRORS AND WARNING ### -->
          <!--<event package="AS" name="Error" />-->
          <!-- ### FILE LOAD AND SAVE ### -->
          <!--<event package="AS" name="FileLoadBegin" />-->
          <!--<event package="AS" name="FileLoadEnd" />-->
          <!--<event package="AS" name="FileSaveBegin" />-->
          <!--<event package="AS" name="FileSaveEnd" />-->
          <!--<event package="AS" name="PageInBegin" />-->
          <!--<event package="AS" name="PageInEnd" />-->
          <!--<event package="AS" name="PageOutBegin" />-->
          <!--<event package="AS" name="PageOutEnd" />-->
          <!-- ### LOCKS ### -->
          <!--<event package="AS" name="Deadlock" />-->
          <!--<event package="AS" name="LockAcquired" />-->
          <!--<event package="AS" name="LockReleased" />-->
          <!--<event package="AS" name="LockTimeout" />-->
          <!--<event package="AS" name="LockWaiting" />-->
          <!-- ### NOTIFICATION EVENTS ### -->
          <!--<event package="AS" name="Notification" />-->
          <!--<event package="AS" name="UserDefined" />-->
          <!-- ### PROGRESS REPORTS ### -->
          <!--<event package="AS" name="ProgressReportBegin" />-->
          <!--<event package="AS" name="ProgressReportCurrent" />-->
          <!--<event package="AS" name="ProgressReportEnd" />-->
          <!--<event package="AS" name="ProgressReportError" />-->
          <!-- ### QUERY EVENTS ### -->
          <!--<event package="AS" name="QueryBegin" />-->
          <event package="AS" name="QueryEnd" />
          <!-- ### QUERY PROCESSING ### -->
          <!--<event package="AS" name="CalculateNonEmptyBegin" />-->
          <!--<event package="AS" name="CalculateNonEmptyCurrent" />-->
          <!--<event package="AS" name="CalculateNonEmptyEnd" />-->
          <!--<event package="AS" name="CalculationEvaluation" />-->
          <!--<event package="AS" name="CalculationEvaluationDetailedInformation" />-->
          <!--<event package="AS" name="DaxQueryPlan" />-->
          <!--<event package="AS" name="DirectQueryBegin" />-->
          <!--<event package="AS" name="DirectQueryEnd" />-->
          <!--<event package="AS" name="ExecuteMDXScriptBegin" />-->
          <!--<event package="AS" name="ExecuteMDXScriptCurrent" />-->
          <!--<event package="AS" name="ExecuteMDXScriptEnd" />-->
          <!--<event package="AS" name="GetDataFromAggregation" />-->
          <!--<event package="AS" name="GetDataFromCache" />-->
          <!--<event package="AS" name="QueryCubeBegin" />-->
          <!--<event package="AS" name="QueryCubeEnd" />-->
          <!--<event package="AS" name="QueryDimension" />-->
          <!--<event package="AS" name="QuerySubcube" />-->
          <!--<event package="AS" name="ResourceUsage" />-->
          <!--<event package="AS" name="QuerySubcubeVerbose" />-->
          <!--<event package="AS" name="SerializeResultsBegin" />-->
          <!--<event package="AS" name="SerializeResultsCurrent" />-->
          <!--<event package="AS" name="SerializeResultsEnd" />-->
          <!--<event package="AS" name="VertiPaqSEQueryBegin" />-->
          <!--<event package="AS" name="VertiPaqSEQueryCacheMatch" />-->
          <!--<event package="AS" name="VertiPaqSEQueryEnd" />-->
          <!-- ### SECURITY AUDIT ### -->
          <!--<event package="AS" name="AuditAdminOperationsEvent" />-->
          <event package="AS" name="AuditLogin" />
          <!--<event package="AS" name="AuditLogout" />-->
          <!--<event package="AS" name="AuditObjectPermissionEvent" />-->
          <!--<event package="AS" name="AuditServerStartsAndStops" />-->
          <!-- ### SESSION EVENTS ### -->
          <!--<event package="AS" name="ExistingConnection" />-->
          <!--<event package="AS" name="ExistingSession" />-->
          <!--<event package="AS" name="SessionInitialize" />-->
          <target package="Package0" name="event_file">
            <!-- Make sure SSAS instance Service Account can write to this location -->
            <parameter name="filename" value="C:\SSASExtendedEvents\MyTrace.xel" />
            <!--Example: <parameter name="filename" value="C:\Program Files\Microsoft SQL Server\MSAS11.SSAS_MD\OLAP\Log\trace_results.xel" />-->
          </target>
        </event_session>
      </ddl300_300:XEvent>
    </Trace>
  </ObjectDefinition>
</Create>
```

```sql
/****
Base query provided by Francesco De Chirico
http://francescodechirico.wordpress.com/2012/08/03/identify-storage-engine-and-formula-engine-bottlenecks-with-new-ssas-xevents-5/
****/
SELECT
      xe.TraceFileName
    , xe.TraceEvent
    , xe.EventDataXML.value('(/event/data[@name="EventSubclass"]/value)[1]','int') AS EventSubclass
    , xe.EventDataXML.value('(/event/data[@name="ServerName"]/value)[1]','varchar(50)') AS ServerName
    , xe.EventDataXML.value('(/event/data[@name="DatabaseName"]/value)[1]','varchar(50)') AS DatabaseName
    , xe.EventDataXML.value('(/event/data[@name="NTUserName"]/value)[1]','varchar(50)') AS NTUserName
    , xe.EventDataXML.value('(/event/data[@name="ConnectionID"]/value)[1]','int') AS ConnectionID
    , xe.EventDataXML.value('(/event/data[@name="StartTime"]/value)[1]','datetime') AS StartTime
    , xe.EventDataXML.value('(/event/data[@name="EndTime"]/value)[1]','datetime') AS EndTime
    , xe.EventDataXML.value('(/event/data[@name="Duration"]/value)[1]','bigint') AS Duration
    , xe.EventDataXML.value('(/event/data[@name="TextData"]/value)[1]','varchar(max)') AS TextData
FROM
(
    SELECT
          [FILE_NAME] AS TraceFileName
        , OBJECT_NAME AS TraceEvent
        , CONVERT(XML,Event_data) AS EventDataXML
    FROM sys.fn_xe_file_target_read_file ( 'C:\SSASExtendedEvents\MyTrace*.xel', null, null, null )
) xe
```
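The `.value()` calls in the query above pull individual fields out of each event's XML payload. As a rough illustration outside SQL Server, the same shredding can be sketched in Python with the standard library's ElementTree, assuming you already have the `event_data` XML text (the column that `sys.fn_xe_file_target_read_file` returns); the binary `.xel` file itself is not parsed here. The sample event and the `shred_event` helper are hypothetical and simplified, not a real captured trace.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified event_data payload for one QueryEnd event.
SAMPLE_EVENT = """
<event name="QueryEnd" package="AS">
  <data name="DatabaseName"><value>AdventureWorksDW</value></data>
  <data name="NTUserName"><value>jdoe</value></data>
  <data name="Duration"><value>1234</value></data>
  <data name="TextData"><value>SELECT [Measures].[Sales Amount] ON 0 FROM [Adventure Works]</value></data>
</event>
"""


def shred_event(event_xml):
    """Return selected fields of one event, mirroring the T-SQL .value() calls."""
    root = ET.fromstring(event_xml.strip())  # the root element is <event>

    def field(name, cast=str):
        # Same path as '(/event/data[@name="..."]/value)[1]' in T-SQL.
        # A missing node yields None, just as .value() yields NULL.
        text = root.findtext(f"data[@name='{name}']/value")
        return None if text is None else cast(text)

    return {
        "TraceEvent": root.get("name"),  # event name attribute, like object_name
        "DatabaseName": field("DatabaseName"),
        "NTUserName": field("NTUserName"),
        "ConnectionID": field("ConnectionID", int),  # absent in the sample
        "Duration": field("Duration", int),
        "TextData": field("TextData"),
    }


print(shred_event(SAMPLE_EVENT))
```

Casting per field (here `int` for `Duration`) plays the role of the SQL type argument (`'bigint'`) in the original query.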

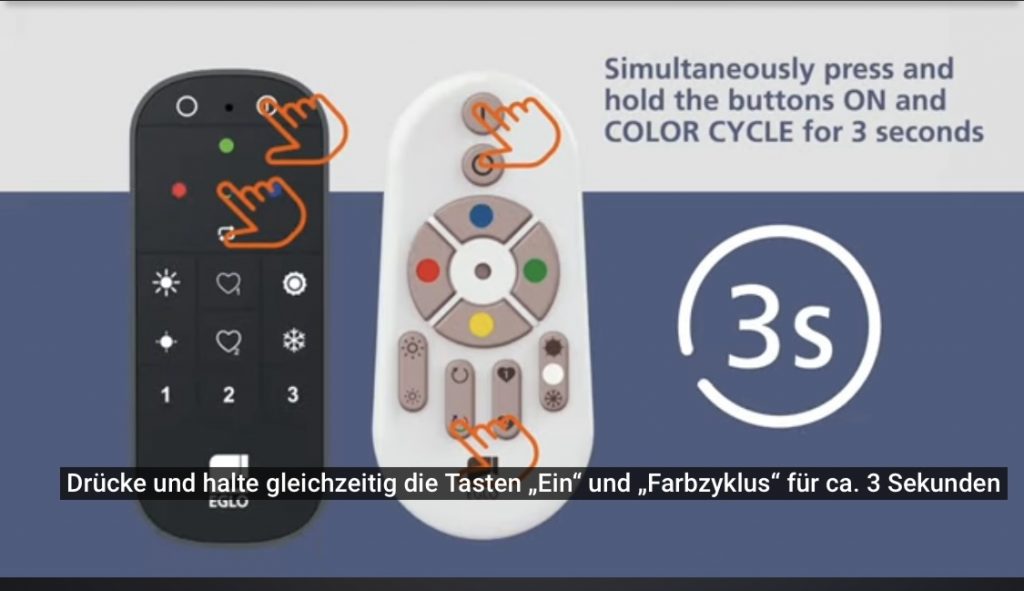

Reset Remote Control 99099 – RCU

Comparison of the Open Source Query Engines: Trino and StarRocks

StarRocks is a native vectorized engine implemented in C++, while Trino is implemented in Java and uses vectorization only to a limited extent. Vectorization helps StarRocks use CPU processing power more efficiently. Vectorized query engines of this kind have the following characteristics:

- They make full use of columnar data management. Such engines read data from columnar storage, keep it in columnar form in memory, and their operators process it column by column. This lets them use the CPU cache more effectively, improving CPU execution efficiency.

- They make full use of the SIMD instructions supported by the CPU, which lets the CPU complete more computations in fewer clock cycles. According to data provided by StarRocks, using vectorized instructions can improve overall performance by 3 to 10 times.

- They can compress data more efficiently, which greatly reduces memory usage and makes them better able to handle queries over large data volumes.
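To make the row-versus-column distinction concrete, here is a toy Python sketch. It is not StarRocks code: plain Python cannot emit SIMD instructions, so it only shows the columnar layout and the batch-at-a-time operator style (a selection vector per batch) that vectorized engines are built around, not their speedup. The data, function names, and batch size are made up for illustration.

```python
from array import array

# Row layout: each record is a tuple (region_id, amount).
rows = [(1, 10.0), (2, 25.0), (1, 7.5), (3, 40.0), (1, 2.5)]


def row_at_a_time_sum(rows, region):
    """Tuple-at-a-time: the loop handles one whole record per iteration."""
    total = 0.0
    for region_id, amount in rows:
        if region_id == region:
            total += amount
    return total


# Columnar layout: one contiguous, typed array per column.
region_col = array("i", [r[0] for r in rows])
amount_col = array("d", [r[1] for r in rows])


def vectorized_sum(region_col, amount_col, region, batch_size=4):
    """Batch-at-a-time: filter one column into a selection vector, then
    aggregate only the selected positions of another column."""
    total = 0.0
    for start in range(0, len(region_col), batch_size):
        end = min(start + batch_size, len(region_col))
        # Filter operator: touches only the region column for this batch.
        selection = [i for i in range(start, end) if region_col[i] == region]
        # Aggregate operator: touches only the amount column.
        total += sum(amount_col[i] for i in selection)
    return total


print(row_at_a_time_sum(rows, 1), vectorized_sum(region_col, amount_col, 1))  # 20.0 20.0
```

In a real engine the inner loops over a batch are what the compiler turns into SIMD instructions; the contiguous typed columns are what make that possible.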

In fact, Trino is also exploring vectorization. It has some SIMD code, but it lags behind StarRocks in depth and coverage, and work on improving its vectorization is ongoing (see https://github.com/trinodb/trino/issues/14237). Meta's Velox project, a vectorized execution library written in C++, aims to use vectorization to accelerate Presto, the engine from which Trino was forked. However, so far, very few companies have formally used Velox in production environments.


The difference between Trino and Dremio

Trino (formerly known as PrestoSQL) and Dremio are both distributed query engines, but they are designed with different architectures and use cases in mind. Here’s a comparison of the two:

Trino

- Query Engine: Trino is a distributed SQL query engine designed for interactive analytic queries against various data sources of all sizes, from gigabytes to petabytes. It’s particularly optimized for OLAP (Online Analytical Processing) queries.

- Data Federation: Trino allows querying data where it lives, without the need to move or copy the data. It can query multiple sources simultaneously and supports a wide variety of data sources like HDFS, S3, relational databases, NoSQL databases, and more.

- Performance: Trino is designed for fast query execution and is capable of providing results in seconds. It achieves high performance through in-memory processing and distributed query execution.

- Use Cases: Trino is primarily used for interactive analytics, where users execute complex queries and expect quick results. It’s suitable for data analysts and scientists who need to perform ad-hoc analysis across different data sources.

- Statelessness: Trino’s architecture is stateless, which means it doesn’t store any data itself. It processes queries and retrieves data directly from the source.
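Federation means the engine reads each source through its own connector and performs the join itself. In Trino this is a single SQL statement over fully qualified `catalog.schema.table` names (for example, a hypothetical `postgresql.public.customers` joined with `hive.sales.orders`). The Python sketch below only mimics the idea with stdlib stand-ins: SQLite plays the relational database and a CSV string plays a file on object storage. The connector functions are hypothetical and are not Trino's connector SPI.

```python
import csv
import io
import sqlite3


def sqlite_connector(conn):
    """Hypothetical connector 1: scan customers from a relational database."""
    yield from conn.execute("SELECT id, name FROM customers")


def csv_connector(text):
    """Hypothetical connector 2: scan orders from a CSV file."""
    for row in csv.DictReader(io.StringIO(text)):
        yield int(row["customer_id"]), float(row["amount"])


def federated_revenue_by_customer(conn, orders_csv):
    # Build side of a hash join: index customer names by id.
    names = {cid: name for cid, name in sqlite_connector(conn)}
    # Probe side: stream the orders and aggregate per customer name.
    totals = {}
    for customer_id, amount in csv_connector(orders_csv):
        name = names.get(customer_id)
        if name is not None:  # inner join: drop orders without a customer
            totals[name] = totals.get(name, 0.0) + amount
    return totals


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Ada"), (2, "Linus")])
orders_csv = "customer_id,amount\n1,30.0\n2,12.5\n1,7.5\n3,99.0\n"
print(federated_revenue_by_customer(conn, orders_csv))  # {'Ada': 37.5, 'Linus': 12.5}
```

Neither source is copied into the other; the engine only holds the smaller side (customers) in memory and streams the larger side through it.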

Dremio

- Data-as-a-Service Platform: Dremio is not just a query engine; it’s a data-as-a-service platform that provides tools for data exploration, curation, and acceleration. It offers a more integrated solution compared to Trino’s specialized query engine.

- Data Reflections: One of Dremio’s key features is its use of data reflections, which are optimized representations of data that can significantly speed up query performance. These reflections allow Dremio to provide faster responses to queries by avoiding full scans of the underlying data.

- Data Catalog: Dremio includes a data catalog that helps users discover and curate data. It provides a unified view of all data sources, making it easier for users to find and access the data they need.

- Data Lineage: It offers data lineage features, providing visibility into how data is transformed and used across the platform, which is beneficial for governance and compliance.

- Use Cases: Dremio is suited for organizations looking for a comprehensive data platform that can handle data exploration, curation, and query acceleration. It’s beneficial for scenarios where performance optimization and data management are critical.
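A reflection can be thought of as a precomputed, query-optimized copy of data that the planner may substitute for a scan of the raw table. The toy Python model below shows only that substitution idea for an aggregation: if a query's grouping column and measure are covered by a pre-aggregated reflection, the answer is rolled up from the reflection instead of the raw rows. The data and helper names are hypothetical; Dremio's real reflection matching, refresh, and storage are not modeled.

```python
from collections import defaultdict

raw_sales = [
    {"region": "EU", "product": "A", "channel": "web", "amount": 10},
    {"region": "EU", "product": "B", "channel": "shop", "amount": 5},
    {"region": "US", "product": "A", "channel": "web", "amount": 20},
    {"region": "EU", "product": "A", "channel": "shop", "amount": 15},
]


def build_aggregation_reflection(rows, dimensions, measure):
    """Pre-aggregate SUM(measure) grouped by the given dimension columns."""
    data = defaultdict(int)
    for row in rows:
        data[tuple(row[d] for d in dimensions)] += row[measure]
    return {"dimensions": tuple(dimensions), "measure": measure, "data": dict(data)}


def sum_by(rows, reflection, group_col, measure):
    """Answer SUM(measure) GROUP BY group_col, preferring the reflection."""
    result = defaultdict(int)
    if reflection["measure"] == measure and group_col in reflection["dimensions"]:
        # Covered: roll up the small pre-aggregated table instead of the raw rows.
        pos = reflection["dimensions"].index(group_col)
        for key, value in reflection["data"].items():
            result[key[pos]] += value
        return dict(result), "reflection"
    # Not covered: fall back to a full scan of the raw data.
    for row in rows:
        result[row[group_col]] += row[measure]
    return dict(result), "raw scan"


reflection = build_aggregation_reflection(raw_sales, ["region", "product"], "amount")
print(sum_by(raw_sales, reflection, "region", "amount"))   # ({'EU': 30, 'US': 20}, 'reflection')
print(sum_by(raw_sales, reflection, "channel", "amount"))  # ({'web': 30, 'shop': 20}, 'raw scan')
```

The roll-up is only valid because SUM can be re-aggregated; an average, for instance, would need the reflection to store both a sum and a count.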

Summary

- Trino is a high-performance, distributed SQL query engine designed for fast, ad-hoc analytics across various data sources. It’s focused on query execution and is best suited for environments where the primary requirement is to run interactive, complex queries over large datasets.

- Dremio offers a broader set of features beyond just query execution, including data curation, cataloging, and acceleration. It’s designed as a data-as-a-service platform that can help organizations manage and optimize their data for various analytics and BI use cases.

Choosing between Trino and Dremio depends on the specific needs of the organization. If the primary need is fast, ad-hoc query execution across diverse data sources, Trino might be the better choice. If there’s a requirement for a comprehensive data platform with features like data curation, cataloging, and acceleration, Dremio could be more suitable.

The difference between Apache Flink and Trino

Apache Flink and Trino (formerly known as PrestoSQL) are both distributed processing systems, but they are designed for different types of workloads and use cases in the big data ecosystem. Here’s a breakdown of their primary differences:

Apache Flink

- Stream Processing: Flink is primarily known for its stream processing capabilities. It can process unbounded streams of data in real-time with high throughput and low latency. Flink provides stateful stream processing, allowing for complex operations like windowing, joins, and aggregations on streams.

- Batch Processing: While Flink is stream-first, it also supports batch processing. The legacy DataSet API has been deprecated; batch jobs now run on the unified DataStream, Table, and SQL APIs in batch execution mode, which treat a batch job as a special case of stream processing over bounded data.

- State Management: Flink has advanced state management capabilities, which are crucial for many streaming applications. It can handle large states efficiently and offers features like state snapshots and fault tolerance.

- APIs and Libraries: Flink offers a variety of APIs (DataStream API, Table API, SQL API) and libraries (CEP for complex event processing, Gelly for graph processing, etc.) for developing complex data processing applications.

- Use Cases: Flink is ideal for real-time analytics, monitoring, and event-driven applications. It’s used in scenarios where low latency and high throughput are critical, and where the application needs to react to data in real-time.
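Windowing and keyed state are easier to picture with a small example. The plain-Python sketch below counts events per key in 1-second tumbling windows, keeps the counts as keyed state, and can snapshot and restore that state, loosely analogous to what a Flink checkpoint captures for one operator. It is not Flink's DataStream API; the class and method names are hypothetical, and watermark generation, late data, parallelism, and source offsets are left out.

```python
import copy

WINDOW_MS = 1000  # hypothetical window size: 1-second tumbling windows


class TumblingWindowCounter:
    """Counts events per key and per tumbling event-time window."""

    def __init__(self, window_ms):
        self.window_ms = window_ms
        self.state = {}  # keyed state: (key, window_start_ms) -> count

    def process(self, key, timestamp_ms):
        # Assign the event to the window [start, start + window_ms).
        window_start = timestamp_ms - timestamp_ms % self.window_ms
        slot = (key, window_start)
        self.state[slot] = self.state.get(slot, 0) + 1

    def fire_windows_before(self, watermark_ms):
        # Emit and clear every window that ends at or before the watermark,
        # i.e. windows that are assumed to receive no further events.
        ready = sorted(s for s in self.state if s[1] + self.window_ms <= watermark_ms)
        return [(key, start, self.state.pop((key, start))) for key, start in ready]

    def snapshot(self):
        # Deep copy of the operator state, loosely like one operator's share of a checkpoint.
        return copy.deepcopy(self.state)

    def restore(self, snapshot):
        self.state = copy.deepcopy(snapshot)


op = TumblingWindowCounter(WINDOW_MS)
for key, ts in [("sensor-a", 100), ("sensor-b", 300), ("sensor-a", 900), ("sensor-a", 1200)]:
    op.process(key, ts)
saved = op.snapshot()
print(op.fire_windows_before(1000))  # [('sensor-a', 0, 2), ('sensor-b', 0, 1)]
op.restore(saved)  # e.g. after a simulated failure, state comes back from the snapshot
```

Keeping the counts keyed by `(key, window_start)` is what lets such state be partitioned by key across workers; in Flink the snapshot is coordinated with source positions so replayed events are not double-counted, which this sketch does not attempt.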

Trino

- SQL Query Engine: Trino is a distributed SQL query engine designed for interactive analytic queries against data sources of all sizes ranging from gigabytes to petabytes. It’s not a database but rather a way to query data across various data sources.

- OLAP Workloads: Trino is optimized for OLAP (Online Analytical Processing) queries and is capable of handling complex analytical queries against large datasets. It’s designed to perform ad-hoc analysis at scale.

- Federation: One of the key features of Trino is its ability to query data from multiple sources seamlessly. This means you can execute queries that join or aggregate data across different databases and storage systems.

- Speed: Trino is designed for fast query execution and can provide results in seconds. It achieves this through techniques like in-memory processing, optimized execution plans, and distributed query execution.

- Use Cases: Trino is used for interactive analytics, where users need to run complex queries and get results quickly. It’s often used for data exploration, business intelligence, and reporting.

Summary

- Flink is best suited for real-time streaming data processing and applications where timely response and state management are crucial.

- Trino excels in fast, ad-hoc analysis over large datasets, particularly when the data is spread across different sources.

Choosing between Flink and Trino depends on the specific requirements of the workload, such as the need for real-time processing, the complexity of the queries, the size of the data, and the latency requirements.

Resetting the Südwind Ambientika wireless+

If the Südwind Ambientika wireless+ only runs in humidity mode (humidity alarm: the red LED is lit and the unit runs in exhaust-air mode only), the troubleshooting guide in the manual offers the following tip: "Increase the threshold at which the hygrostat intervenes."

On my unit, this threshold has been set to the highest value ever since commissioning, so that cannot solve the problem.

The company's friendly support team then provided the solution: the following actions must be carried out in this order. After that, the fan worked as usual again.

Do the following on the master unit:

- Unit OFF

- Unit ON

- Unit FILTER RESET

- Then AUTO mode with the humidity threshold set to 3 drops

The Südwind Ambientika wireless+ should now work normally again.

Commodore C64

https://www.c64.com/

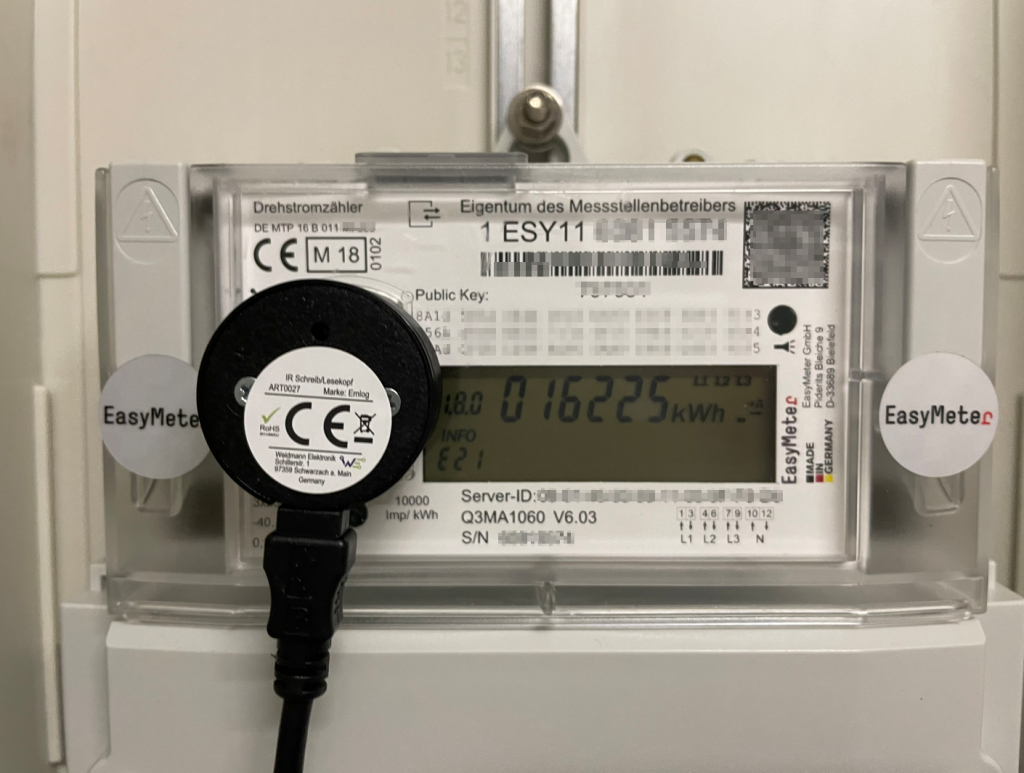

Home Assistant – Deye microinverter

GitHub – kbialek/deye-inverter-mqtt: Reads Deye solar inverter metrics and posts them over mqtt

VZLOGGER:

GitHub – markussiebert/homeassistant-addon-vzlogger: vzlogger as addon for homeassistant supervisor

GitHub – volkszaehler/vzlogger: Logging utility for various meters & sensors

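Smart meter has been unlocked with its PIN ("freigeblinkt", i.e. the PIN was entered by flashing a light at the optical interface):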

vzlogger without middleware: "api": "null"

The Digital Antiquarian – filfre.net

The Digital Antiquarian (filfre.net)

For example, read about » Elite (or, The Universe on 32 K Per Day) The Digital Antiquarian (filfre.net)

A universe in 32 K, an icon of British game development, and the urtext of a genre of space-combat simulations, the sheer scope of David Braben and Ian Bell’s game of combat, exploration, and trade can inspire awe even today.

» Hall of Fame The Digital Antiquarian (filfre.net)

The best graphic adventure ever made for the Commodore 64 and the starting point of the LucasArts tradition of saner, fairer puzzling, this intricately nonlinear and endlessly likable multi-character caper deserves a spot here despite a few rough edges.

» A New Force in Games, Part 3: SCUMM The Digital Antiquarian (filfre.net)